One of these risks was highlighted recently when Google engineer Blake Lemoine was suspended and then fired after claiming that LaMDA, a chatbot he was working on, had become sentient and was thinking, reasoning and expressing feelings equivalent to those of a human child.

Lemoine's critics were quick to point out that this is nothing more than the ELIZA effect: a computer science term for the tendency to unconsciously anthropomorphise computer-generated responses, so that they appear human. In LaMDA's case, this could mean that a large number of chatbot conversations were edited down into a narrative that only appears coherent and humanlike. Indeed, Google's position is that LaMDA, which stands for Language Model for Dialogue Applications, is nothing more than a sophisticated random word generator.

Google is not alone in presenting AI risks. Meta's BlenderBot 3 has self-identified as "alive" and "human" and has even criticised Mark Zuckerberg. An MIT paper titled "Hey Alexa, Are you Trustworthy?" shows that people are more likely to use Amazon's assistant if it exhibits social norms and interactions, which creates an incentive for parent companies to develop AI that is, or at least appears to be, sentient. Nor are AI risks confined to big tech. Autonomous driving, financial services, manufacturing and industrials also carry AI risks that are potentially underappreciated by investors and by society as a whole.

AI as an ESG and sustainability issue

By far the majority of ESG and sustainability research focuses on planetary boundary-related issues such as climate change and biodiversity. However, if the development and application of AI are mishandled and technological singularity becomes a possibility, that could be the biggest human sustainability issue of all.

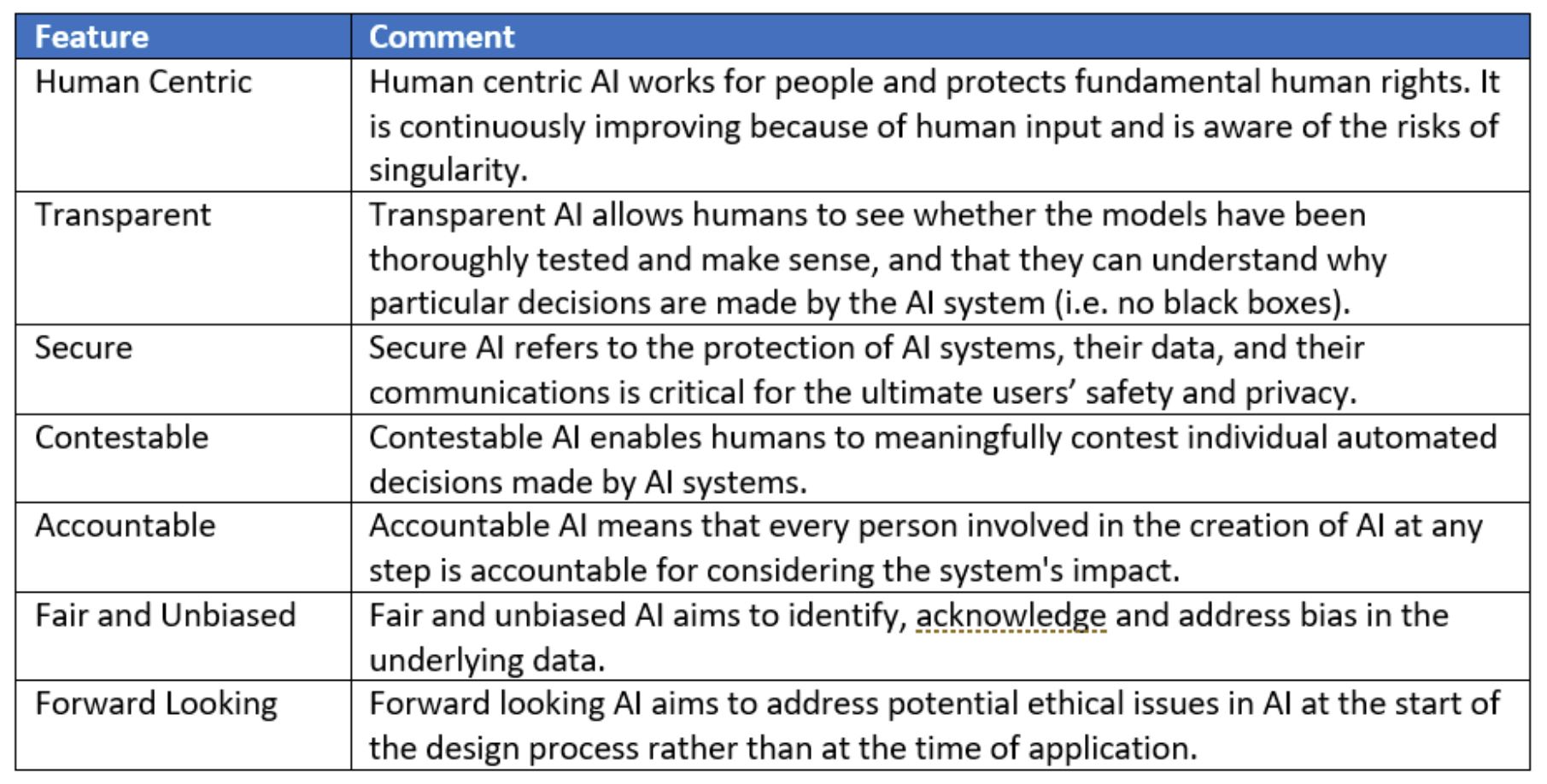

So, how should investors think about the ESG and sustainability risks associated with AI development and application? The table below outlines six features of responsible AI design that can serve as a checklist when engaging with corporates on the sustainability of their AI design process:

On the governance side of ESG, specific AI governance measures are also critical to ensure sufficient oversight of AI risks. These include an AI ethics committee, AI-related disclosures and aligned KPIs.

Governance best practice is for corporates to have a dedicated committee for responsible AI that is independent, multidisciplinary, rotating and diverse. Microsoft is an example of best practice in this regard. It has an AI, Ethics and Effects in Engineering and Research (AETHER) Committee with representatives from its engineering, consulting, legal and research teams. Microsoft also has an Office of Responsible AI, headed by a Chief Responsible AI Officer and a Chief AI Ethics Officer.

Disclosure of AI products, their design and their commercialisation is clearly critical. Despite the criticism surrounding LaMDA, Google is so far one of the few large tech companies to disclose a list of AI applications it will NOT pursue, including applications that cause harm, weapons, surveillance that violates international norms, and AI that contravenes international law and human rights. Google notes that this list may change as society's understanding of AI evolves. Increasingly, we expect companies to address sustainable and responsible AI in their annual ESG and sustainability reporting.

Aligned KPIs are likely the most difficult aspect of AI governance to define and analyse. In principle, alignment means that KPIs are not geared towards commercialising AI at all costs. KPIs that do reward commercialisation at all costs could discourage employees working on AI design and application from raising concerns or discontinuing AI projects that conflict with the company's AI principles. This is an area for engagement, as very little is currently disclosed about KPI alignment.

Conclusion

Google's LaMDA has reignited the debate about the ethical risks of AI development and application. While most experts agree that technological singularity (i.e. the point at which technology becomes uncontrollable and takes over) will not happen in our lifetime, that does not mean the risks of AI development and application can be dismissed. The bulk of ESG and sustainability research tends to focus on planetary boundary-related risks such as climate change and biodiversity, but if AI companies mismanage singularity risks, that could be the biggest risk to human sustainability of all.

Mary Manning, global portfolio manager, Alphinity Investment Management